Made in 2014 for the class “Interactive Music 2” at Tokyo University of the Arts.

General Info

The sound takes its information from the visuals, and the visuals draw the sound's waveform. Each kind of information is transformed into the other interactively.

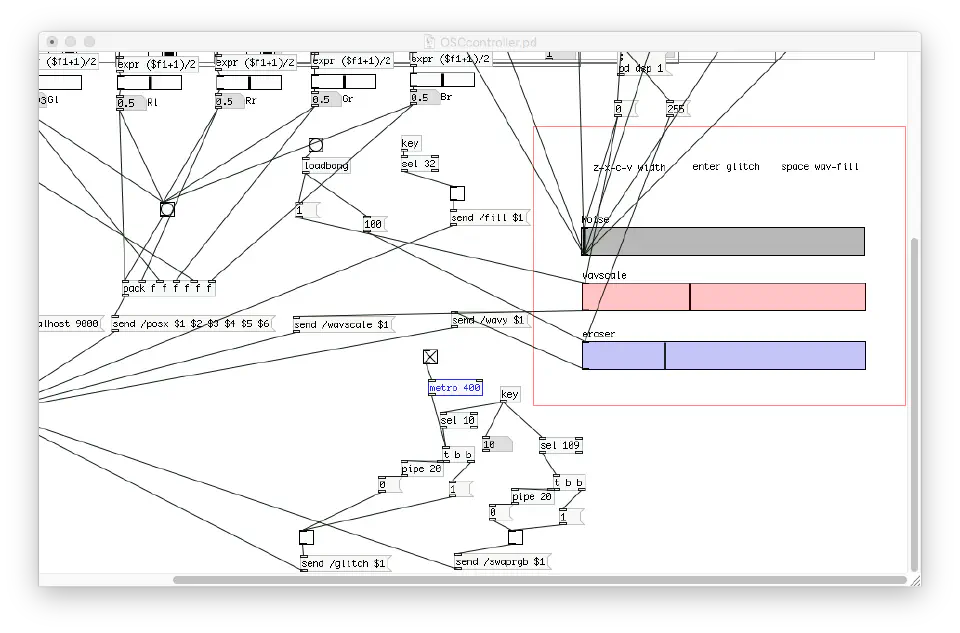

The application (both sound and visuals) was developed with openFrameworks. The GUI was made with Pure Data.

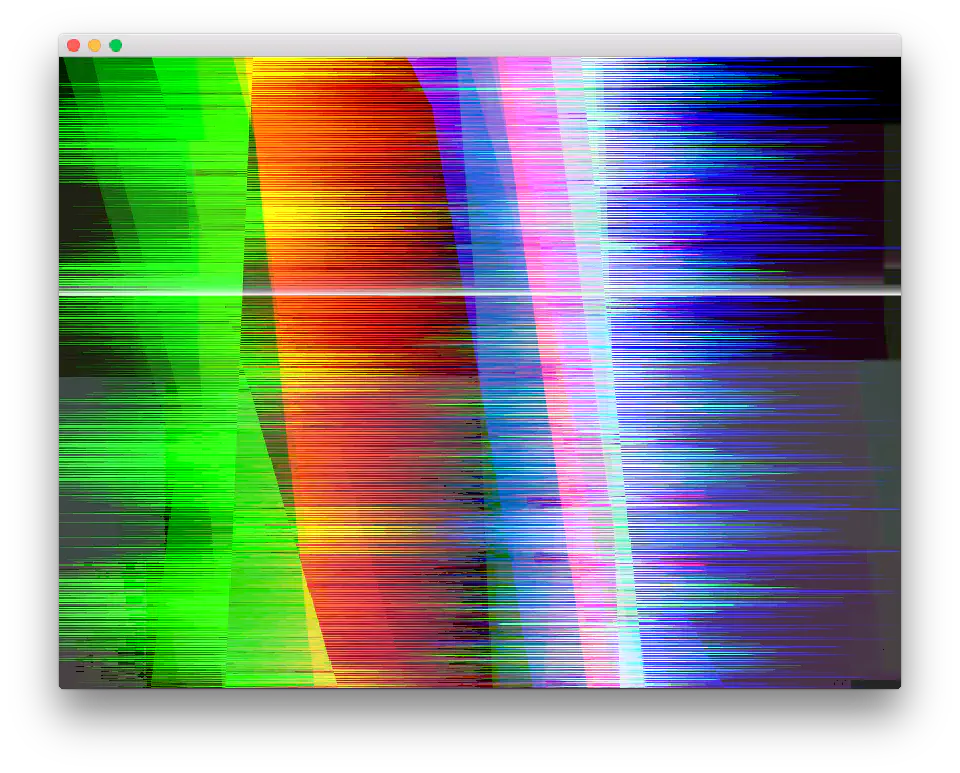

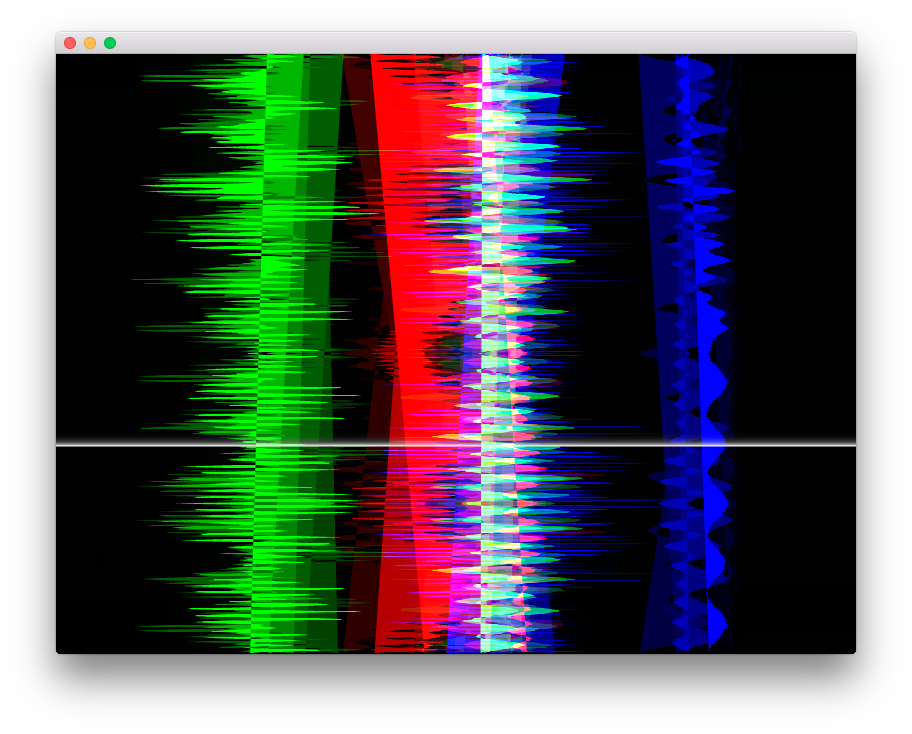

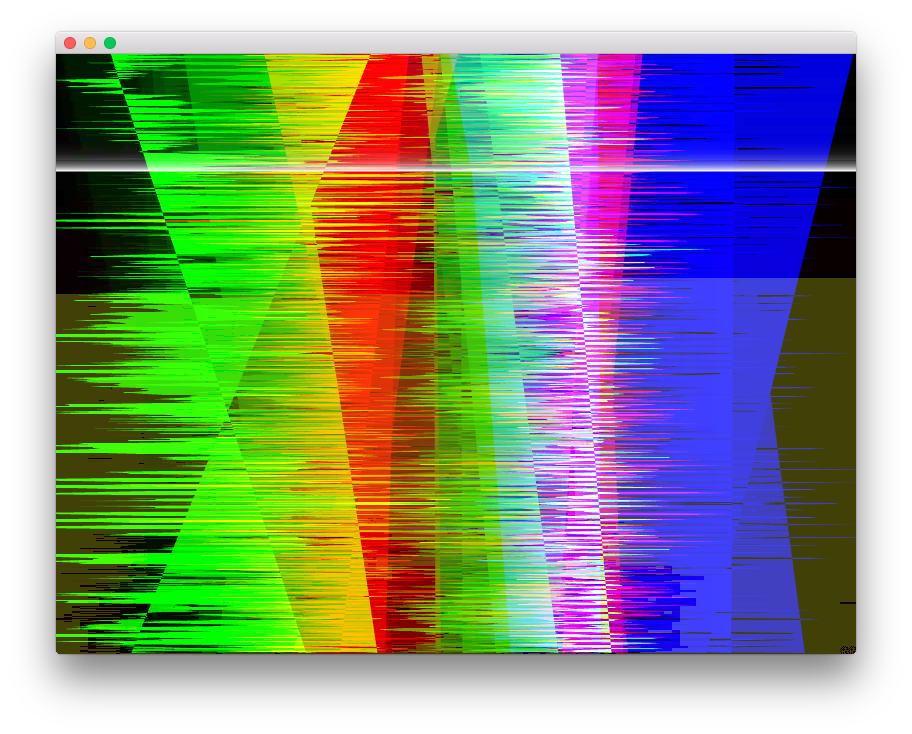

Screenshot

Pd patch

System

Audio→Visual

The visual is built from 6 waveforms (2 × RGB). Internally there are 6 audio channels, but each set of R, G and B waveforms is summed and sent to the left and right output channels.
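A minimal C++ sketch of that stereo mixdown, assuming the six waveforms arrive as equal-length float buffers ordered left R, G, B, then right R, G, B. The struct `StereoFrame`, the function `mixToStereo` and the 1/3 gain (added so the sum stays in [-1, 1]) are illustrative assumptions, not code from the original app.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// One output sample pair.
struct StereoFrame {
    float left;
    float right;
};

// Assumed channel layout: ch[0..2] = left R, G, B; ch[3..5] = right R, G, B.
// Each side's three colour waveforms are summed into one output channel.
// The 1/3 gain is an assumption that keeps the result in [-1, 1].
std::vector<StereoFrame> mixToStereo(const std::array<std::vector<float>, 6>& ch) {
    const std::size_t n = ch[0].size();
    for (const auto& c : ch) assert(c.size() == n);  // all buffers same length
    std::vector<StereoFrame> out(n);
    for (std::size_t i = 0; i < n; ++i) {
        out[i].left  = (ch[0][i] + ch[1][i] + ch[2][i]) / 3.0f;
        out[i].right = (ch[3][i] + ch[4][i] + ch[5][i]) / 3.0f;
    }
    return out;
}
```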

The horizontal drawing position, the waveform amplitude and the filling of the waveform can be controlled from Pure Data. The visual can also be JPEG-glitched at any time.
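The three Pd-controlled parameters could drive a rasterizer like the hypothetical `drawWave` below. This is a sketch under assumptions: one single-channel pixel buffer per colour, samples in [-1, 1], and a drawing position that wraps around the image width. `WaveStyle` and its fields are illustrative names, and the JPEG glitch is not covered.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-ins for the three values controlled from Pd.
struct WaveStyle {
    int offsetX;      // horizontal drawing position, in pixels
    float amplitude;  // vertical gain applied to each sample
    bool fill;        // fill between the centre line and the waveform
};

// Draws one waveform into a width x height single-channel image.
// Sample i lands in column (offsetX + i) mod width.
void drawWave(std::vector<std::uint8_t>& img, int width, int height,
              const std::vector<float>& samples, const WaveStyle& s) {
    assert(width > 0 && height > 0);
    assert(img.size() == static_cast<std::size_t>(width) * height);
    const int centre = height / 2;
    for (std::size_t i = 0; i < samples.size(); ++i) {
        int x = static_cast<int>((s.offsetX + static_cast<long>(i)) % width);
        if (x < 0) x += width;  // handle negative offsets
        // Positive samples go up (smaller row index), clamped to the image.
        int y = centre - static_cast<int>(samples[i] * s.amplitude * centre);
        y = std::min(std::max(y, 0), height - 1);
        // Filled: paint the span from the centre line to the sample.
        const int y0 = s.fill ? std::min(y, centre) : y;
        const int y1 = s.fill ? std::max(y, centre) : y;
        for (int row = y0; row <= y1; ++row) {
            img[static_cast<std::size_t>(row) * width + x] = 255;
        }
    }
}
```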

Visual→Audio

A white horizontal line sweeps from the top of the screen to the bottom.

In each frame, the row of pixels under the white line is scanned, and the R, G and B values of its left half and of its right half are each taken as an audio spectrum.

Each spectrum is converted with an inverse FFT, and the resulting waveform is written to the audio buffer.
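The scan-and-resynthesize step can be sketched as follows, under stated assumptions: the line moves one row per frame, pixels are interleaved 8-bit RGB, each pixel value is used as a bin magnitude with zero phase, and a naive O(K²) inverse DFT stands in for the inverse FFT (same result, just slower). `scanRow`, `rowToMagnitudes` and `inverseDft` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Row under the white line at a given frame. Assumes the line moves one
// row per frame and wraps back to the top.
int scanRow(long frame, int height) {
    assert(height > 0);
    return static_cast<int>(frame % height);
}

// Reads one colour channel (0=R, 1=G, 2=B) from the left (side 0) or right
// (side 1) half of an interleaved 8-bit RGB row, scaled to [0, 1].
std::vector<float> rowToMagnitudes(const std::vector<std::uint8_t>& rgbRow,
                                   int width, int side, int channel) {
    assert(rgbRow.size() == static_cast<std::size_t>(width) * 3);
    const int half = width / 2;
    std::vector<float> mags(half);
    for (int k = 0; k < half; ++k) {
        mags[k] = rgbRow[static_cast<std::size_t>(side * half + k) * 3 + channel] / 255.0f;
    }
    return mags;
}

// Zero-phase inverse real DFT: bin k becomes a cosine with k cycles per
// 2*K-sample block, scaled by 1/K. A real app would use an FFT library.
std::vector<float> inverseDft(const std::vector<float>& mags) {
    const std::size_t K = mags.size();
    const std::size_t N = 2 * K;
    const double pi = std::acos(-1.0);
    std::vector<float> out(N, 0.0f);
    for (std::size_t n = 0; n < N; ++n) {
        double acc = 0.0;
        for (std::size_t k = 0; k < K; ++k) {
            acc += mags[k] * std::cos(2.0 * pi * k * n / N);
        }
        out[n] = static_cast<float>(acc / K);
    }
    return out;
}
```

For an even row width W, the six calls to `rowToMagnitudes` (2 sides × 3 channels) each yield W/2 bins, and `inverseDft` turns each into W samples. That is why the row width and the audio buffer size have to be reconciled.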

Technical tips

Conversion between the audio buffer size and the pixel-array size was done using ofFbo.
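To picture the size conversion on the CPU, a row of pixel values can be linearly resampled to the audio buffer length. This is a hedged stand-in: ofFbo does the scaling on the GPU through texture filtering, which samples at pixel centres, so its values will not match this hypothetical `resampleLinear` helper exactly.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Linearly resamples src to dstSize values. The first and last samples of
// src map onto the first and last samples of the result.
std::vector<float> resampleLinear(const std::vector<float>& src, std::size_t dstSize) {
    assert(!src.empty() && dstSize > 0);
    std::vector<float> dst(dstSize);
    if (src.size() == 1 || dstSize == 1) {
        for (auto& v : dst) v = src[0];  // nothing to interpolate between
        return dst;
    }
    const double scale = static_cast<double>(src.size() - 1) / (dstSize - 1);
    for (std::size_t i = 0; i < dstSize; ++i) {
        const double pos = i * scale;                     // position in src
        const std::size_t j = static_cast<std::size_t>(pos);
        const double t = pos - j;                         // fractional part
        const float next = (j + 1 < src.size()) ? src[j + 1] : src[j];
        dst[i] = static_cast<float>(src[j] * (1.0 - t) + next * t);
    }
    return dst;
}
```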

The frame rate and the buffer rate (sampling rate / buffer size) should be the same. Simultaneous access to the audio buffer from the visual side and the audio side must be avoided, because it crashes the app.
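As a worked example, with $f_s$ the sampling rate and $N$ the buffer size in samples (the specific values below are assumed for illustration, not taken from the original app):

$$
f_{\text{frame}} = \frac{f_s}{N}, \qquad
\frac{44100\ \text{Hz}}{735} = 60\ \text{fps}, \qquad
\frac{44100\ \text{Hz}}{512} \approx 86.13\ \text{fps}
$$

So a 44.1 kHz stream with 735-sample buffers matches a 60 fps display exactly, while a 512-sample buffer would require about 86 fps.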

Thread synchronization such as a mutex should have been used here, but I didn't use it because I didn't know much about it at the time. Instead, the frame rate was forced to (sampling rate / buffer size). It rarely crashes, probably because the visual side accesses a buffer that is one frame behind the audio side.
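For reference, a mutex-guarded hand-off between the two sides could look like the sketch below, using `std::mutex` and `std::lock_guard` from the C++ standard library. `SharedBuffer`, `publish` and `consume` are hypothetical names: the visual thread publishes each resynthesized buffer and the audio callback takes the latest one. Locking inside an audio callback can still cause dropouts if the other side holds the lock for long, so a lock-free single-producer/single-consumer queue is a common next step.

```cpp
#include <cassert>
#include <cstddef>
#include <mutex>
#include <vector>

// Passes fixed-size sample buffers from the visual thread (producer) to the
// audio thread (consumer). Both sides touch the shared data only while
// holding the mutex, so neither ever sees a half-written buffer.
class SharedBuffer {
public:
    explicit SharedBuffer(std::size_t size) : pending_(size, 0.0f) {}

    // Visual thread: store a new buffer after the inverse transform.
    void publish(const std::vector<float>& samples) {
        std::lock_guard<std::mutex> lock(mutex_);
        assert(samples.size() == pending_.size());
        pending_ = samples;
        fresh_ = true;
    }

    // Audio thread: copy the latest buffer into out. Returns false and
    // leaves out untouched if nothing new was published since last call.
    bool consume(std::vector<float>& out) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (!fresh_) return false;
        out = pending_;
        fresh_ = false;
        return true;
    }

private:
    std::mutex mutex_;
    std::vector<float> pending_;
    bool fresh_ = false;
};
```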